Example 4: Use GLMdenoise, allowing voxel-specific HRFs

Contents

Download dataset (if necessary) and add GLMdenoise to the MATLAB path

setup;

Load in the data

load('exampledataset.mat');

whos

Name Size Bytes Class Attributes

data 1x10 173671520 cell

design 1x10 9600 cell

stimdur 1x1 8 double

tr 1x1 8 double

Outline the strategy

Step 1: Initial call to GLMdenoise to learn the noise regressors

hrf = getcanonicalhrf(stimdur,tr)';

results = GLMdenoisedata(design,data,stimdur,tr, ...

'assume',hrf,struct('numboots',0), ...

'example4figures');

noisereg = cellfun(@(x) x(:,1:results.pcnum),results.pcregressors,'UniformOutput',0);

noisereg

*** GLMdenoisedata: generating sanity-check figures. ***

*** GLMdenoisedata: performing cross-validation to determine R^2 values. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: determining noise pool. ***

*** GLMdenoisedata: calculating noise regressors. ***

*** GLMdenoisedata: performing cross-validation with 1 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 2 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 3 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 4 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 5 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 6 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 7 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 8 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 9 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 10 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 11 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 12 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 13 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 14 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 15 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 16 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 17 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 18 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 19 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: performing cross-validation with 20 PCs. ***

cross-validating model..........done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: selected number of PCs is 6. ***

*** GLMdenoisedata: fitting final model (no denoising, for comparison purposes). ***

fitting model...done.

preparing output...done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: fitting final model (with denoising). ***

fitting model...done.

preparing output...done.

computing model fits...done.

computing R^2...done.

computing SNR...done.

*** GLMdenoisedata: calculating denoised data and PC weights. ***

*** GLMdenoisedata: converting to percent BOLD change. ***

*** GLMdenoisedata: generating figures. ***

noisereg =

Columns 1 through 4

[265x6 single] [265x6 single] [265x6 single] [265x6 single]

Columns 5 through 8

[265x6 single] [265x6 single] [265x6 single] [265x6 single]

Columns 9 through 10

[265x6 single] [265x6 single]

Step 2: Re-analyze the data, tailoring the HRF voxel-by-voxel

xyzsize = [64 64 4];

numcond = 35;

opt = struct('extraregressors',{noisereg},'hrfthresh',-Inf,'suppressoutput',1);

hrfs = zeros([xyzsize length(hrf)],'single');

betas = zeros([xyzsize numcond],'single');

R2 = zeros([xyzsize],'single');

cache = [];

for xx=1:xyzsize(1)

fprintf('#');

for yy=1:xyzsize(2)

fprintf('.');

for zz=1:xyzsize(3)

data0 = cellfun(@(x) flatten(x(xx,yy,zz,:)),data,'UniformOutput',0);

[results0,cache] = GLMestimatemodel(design,data0,stimdur,tr, ...

'optimize',hrf,0,opt,cache);

hrfs(xx,yy,zz,:) = results0.modelmd{1};

betas(xx,yy,zz,:) = results0.modelmd{2};

R2(xx,yy,zz) = results0.R2;

end

end

end

#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................#................................................................

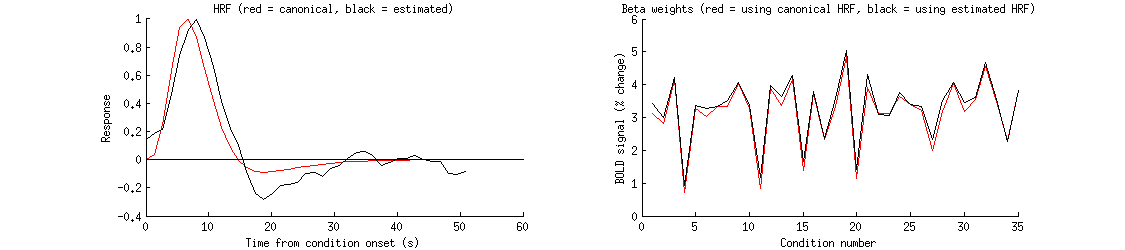

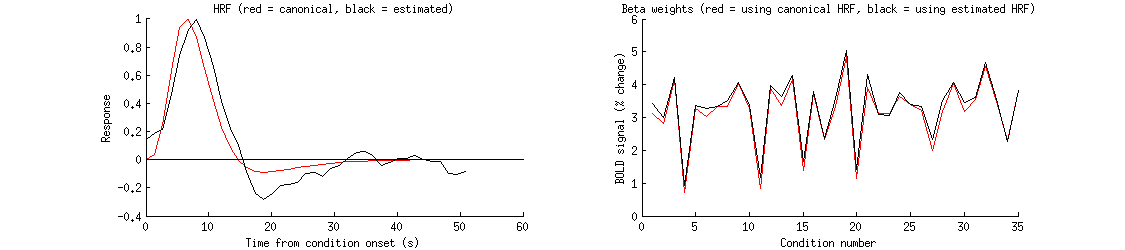

Inspect the results

xx = 53; yy = 27; zz = 1;

figure;

set(gcf,'Units','points','Position',[100 100 900 200]);

subplot(1,2,1); hold on;

plot(0:tr:(length(hrf)-1)*tr,hrf,'r-');

plot(0:tr:(length(hrf)-1)*tr,flatten(hrfs(xx,yy,zz,:)),'k-');

straightline(0,'h','k-');

xlabel('Time from condition onset (s)');

ylabel('Response');

title('HRF (red = canonical, black = estimated)');

subplot(1,2,2); hold on;

plot(flatten(results.modelmd{2}(xx,yy,zz,:)),'r-');

plot(flatten(betas(xx,yy,zz,:)),'k-');

straightline(0,'h','k-');

xlabel('Condition number');

ylabel('BOLD signal (% change)');

title('Beta weights (red = using canonical HRF, black = using estimated HRF)');